Introduction: The Dynamic Relationship Powering Modern AI

The relationship between agents and their environments is one of the most fundamental concepts in artificial intelligence, yet it’s often misunderstood or oversimplified. An AI agent doesn’t exist in isolation: it operates within an environment, constantly perceiving, reasoning, and taking actions based on what it senses. This dynamic interaction forms the backbone of everything from autonomous vehicles navigating city streets to chatbots helping customers, from industrial robots assembling products to intelligent assistants managing your calendar. Understanding how agents interact with their environments is crucial for anyone working with AI in 2026, whether you’re a developer building the next generation of autonomous systems or a business leader deciding where to deploy AI solutions.

In 2026, the field of agentic AI is growing rapidly, with Gartner predicting that 40% of enterprise applications will embed AI agents by year’s end, up from less than 5% in 2025. The market is expected to grow from $7.8 billion to a projected $52 billion by 2030, driven by advances in how smoothly agents interact with their environments. Unlike earlier AI systems that required constant human guidance, today’s agents can sense their surroundings, reason about complex goals, and take purposeful actions autonomously. This evolution from passive tools to active participants represents a paradigm shift in artificial intelligence, one that’s transforming industries from healthcare to manufacturing, finance to customer service. To understand where AI is heading, we must first understand the interplay between agents and the environments they inhabit.

What Are AI Agents? The Building Blocks of Intelligence

Defining AI Agents in Modern Context

An AI agent is a system or program capable of autonomously performing tasks on behalf of users or other systems by designing its own workflow and using available tools. More precisely, an agent is any entity that can perceive its environment through sensors (which might be cameras, microphones, data feeds, or software interfaces), process that information, make decisions based on its goals, and take actions that affect its environment.

The term “agentic” comes from “agent,” meaning an entity capable of independent action and decision-making. In AI, agentic describes systems that proactively initiate behavior aligned with defined objectives, extending beyond simple software bots coded for specific functions into intelligent, adaptive entities.

Key characteristics that define AI agents:

- Autonomy: Agents operate independently without constant human supervision

- Reactivity: They perceive their environment and respond to changes in a timely fashion

- Proactivity: Agents take initiative to achieve goals, not just react to stimuli

- Social Ability: Many agents can interact with other agents or humans

- Learning: Advanced agents improve performance over time through experience

The Evolution from Simple to Agentic Systems

AI agents have evolved dramatically over decades:

1960s-1980s: Rule-Based Agents

- Simple if-then logic

- Predefined responses to specific inputs

- Limited adaptability to new situations

1990s-2010s: Learning Agents

- Machine learning enables pattern recognition

- Agents can improve from data

- Still primarily reactive, not proactive

2020s-Present: Agentic AI

- Autonomous planning and decision-making

- Multi-step reasoning and problem-solving

- Tool usage and environment manipulation

- Continuous learning and adaptation

According to TileDB’s comprehensive analysis, agentic AI operates as a closed loop that senses its environment, reasons about objectives, chooses actions, and learns from outcomes, then repeats this cycle to iteratively improve performance over time.
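The closed loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor's implementation: the `ThermostatEnv` and `ThermostatAgent` classes, the target temperature, and the one-degree step size are all hypothetical, and the "learn" step here only records experience rather than updating a model.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatEnv:
    """Hypothetical environment: a room whose temperature the agent can nudge."""
    temperature: float = 15.0

    def observe(self) -> float:
        # Sense: expose the part of the state the agent can perceive.
        return self.temperature

    def apply(self, action: str) -> None:
        # Act: the agent's choice changes the environment's state.
        if action == "heat":
            self.temperature += 1.0
        elif action == "cool":
            self.temperature -= 1.0


@dataclass
class ThermostatAgent:
    """Hypothetical agent that keeps the room near a target temperature."""
    target: float = 20.0
    history: list = field(default_factory=list)

    def decide(self, observation: float) -> str:
        # Reason: compare what was sensed against the goal.
        if observation < self.target - 0.5:
            return "heat"
        if observation > self.target + 0.5:
            return "cool"
        return "idle"

    def record(self, observation: float, action: str) -> None:
        # Learn (in the loosest sense): keep experience for later analysis.
        self.history.append((observation, action))


def run_loop(env: ThermostatEnv, agent: ThermostatAgent, steps: int) -> float:
    """Repeat the sense, reason, act, record cycle a fixed number of times."""
    for _ in range(steps):
        observation = env.observe()
        action = agent.decide(observation)
        env.apply(action)
        agent.record(observation, action)
    return env.temperature


env, agent = ThermostatEnv(), ThermostatAgent()
final_temperature = run_loop(env, agent, steps=10)
print(final_temperature)  # 20.0
```

Keeping the environment and the agent as separate objects mirrors the separation the article draws: the agent only sees what `observe` returns and only changes the world through `apply`.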

Understanding the Environment: Where AI Agents Live and Work

Types of Environments in AI Systems

The environment is everything outside the agent itself that the agent can perceive and interact with. Environments can be categorized along several dimensions:

Fully Observable vs. Partially Observable:

- Fully Observable: The agent can sense all relevant aspects of the environment at all times (e.g., a chess-playing AI can see the entire board)

- Partially Observable: The agent has limited or incomplete information (e.g., a self-driving car can’t see around corners or inside other vehicles)

Deterministic vs. Stochastic:

- Deterministic: Given the current state and an action, the next state is completely predictable (e.g., solving a Rubik’s cube)

- Stochastic: Outcomes involve randomness or uncertainty (e.g., stock trading where markets are unpredictable)

Episodic vs. Sequential:

- Episodic: Each action is independent, with no long-term consequences (e.g., spam email classification)

- Sequential: Current decisions affect future states (e.g., autonomous vehicle navigation where each turn affects subsequent options)

Static vs. Dynamic:

- Static: The environment doesn’t change while the agent is deliberating (e.g., crossword puzzles)

- Dynamic: The environment changes in real-time (e.g., multiplayer video games, stock markets)

Discrete vs. Continuous:

- Discrete: Limited number of distinct states and actions (e.g., board games)

- Continuous: Infinite possibilities for states and actions (e.g., robot arm movements in 3D space)
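One way to make these five dimensions concrete is to record them as data and derive design implications from them. The sketch below is illustrative only: the `EnvironmentProfile` class, its field names, and the capability labels returned by `design_needs` are hypothetical, chosen to match the categories above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProfile:
    """Hypothetical record of the five dimensions described above."""
    fully_observable: bool
    deterministic: bool
    episodic: bool
    static: bool
    discrete: bool

    def design_needs(self) -> list[str]:
        """Map each 'hard' property to a capability the agent will likely need."""
        needs = []
        if not self.fully_observable:
            needs.append("state estimation")
        if not self.deterministic:
            needs.append("probabilistic reasoning")
        if not self.episodic:
            needs.append("multi-step planning")
        if not self.static:
            needs.append("real-time decision-making")
        if not self.discrete:
            needs.append("continuous control")
        return needs


# Chess: observable, deterministic, sequential, static, discrete.
chess = EnvironmentProfile(True, True, False, True, True)
# Urban driving: the hard setting on every dimension.
driving = EnvironmentProfile(False, False, False, False, False)

print(chess.design_needs())    # ['multi-step planning']
print(len(driving.design_needs()))  # 5
```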

Real-World Environment Examples

Different industries present different environmental challenges for AI agents:

Healthcare Environments:

- Partially observable (can’t directly see inside patients)

- Stochastic (patient responses to treatment vary)

- Sequential (treatment decisions have long-term consequences)

- Dynamic (patient conditions change continuously)

Manufacturing Environments:

- Often fully observable (extensive sensor coverage)

- Mostly deterministic (controlled conditions)

- Sequential (production steps depend on previous stages)

- Dynamic (assembly lines operate in real-time)

Financial Trading Environments:

- Partially observable (hidden market information)

- Highly stochastic (unpredictable market movements)

- Sequential (trades affect future prices)

- Dynamic (markets change constantly)

Customer Service Environments:

- Partially observable (limited customer context initially)

- Stochastic (customer needs vary unpredictably)

- Episodic or sequential (depending on issue complexity)

- Dynamic (customer mood and situation evolve during interaction)

The Perception-Reasoning-Action Cycle: How Agents Interact with Environments

Step 1: Perception (Sensing the Environment)

The first critical component of the agent-environment cycle is perception: how agents gather information about their surroundings.

Perception Methods:

Visual Perception:

- Computer vision for image and video analysis

- Object detection, recognition, and tracking

- Scene understanding and spatial reasoning

- Example: Self-driving cars identifying pedestrians, traffic signs, and road conditions

Auditory Perception:

- Speech recognition and natural language understanding

- Sound classification and acoustic analysis

- Example: Voice assistants like Alexa understanding spoken commands

Textual/Data Perception:

- Natural language processing of written text

- Database queries and API calls

- Log file analysis and pattern detection

- Example: Customer service bots reading support tickets

Sensor Perception:

- Temperature, pressure, motion sensors

- GPS and location data

- IoT device readings

- Example: Smart home systems monitoring room occupancy and climate

IBM’s analysis emphasizes that AI agents rely on interconnected components to perceive their environment, process information, decide, collaborate, take meaningful actions, and learn from experience.

Step 2: Reasoning (Making Sense of Information)

Once an agent perceives its environment, it must reason about what actions to take. This involves:

Goal Identification:

- Understanding what outcomes to achieve

- Prioritizing multiple objectives

- Balancing short-term and long-term goals

Situation Analysis:

- Interpreting sensory data in context

- Identifying relevant patterns and relationships

- Detecting anomalies or unexpected conditions

Planning:

- Breaking complex goals into sub-tasks

- Sequencing actions logically

- Considering multiple possible action paths

- Evaluating risks and benefits of different approaches

Decision-Making:

- Choosing the best action given current information

- Handling uncertainty and incomplete data

- Adapting plans when circumstances change
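Choosing the best action under uncertainty is often formalized as maximizing expected utility. The sketch below shows that idea in its simplest form; it is one common approach rather than the method any particular agent uses, and the action names, probabilities, and utility values are hypothetical numbers made up for illustration.

```python
def expected_utility(outcomes):
    """outcomes: list of (probability, utility) pairs for one action."""
    return sum(p * u for p, u in outcomes)


def choose_action(options):
    """Return the action whose outcomes have the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name]))


# Hypothetical support-desk decision: answer now, or ask a clarifying question.
options = {
    "answer_now": [(0.6, 10.0), (0.4, -20.0)],   # fast, but risks a wrong answer
    "ask_question": [(0.9, 8.0), (0.1, -2.0)],   # slower, but rarely wrong
}

print(choose_action(options))  # ask_question
```

Here the risky option scores 0.6 × 10 + 0.4 × (−20) = −2, while the cautious one scores 0.9 × 8 + 0.1 × (−2) = 7, so the agent asks first.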

According to research from TileDB, agentic AI blends structured optimization of reinforcement learning with the flexible reasoning of large language models (LLMs), enabling systems to achieve outcomes with minimal direction by integrating memory, context awareness, and decision-making capabilities.

Step 3: Action (Affecting the Environment)

Actions are how agents influence their environment. The nature of actions depends heavily on the agent’s purpose and its environment:

Physical Actions:

- Robot movements (grabbing, lifting, assembling)

- Autonomous vehicle steering, acceleration, braking

- Drone flight adjustments

- Example: Manufacturing robots performing precise assembly operations

Digital Actions:

- Sending emails or messages

- Creating, updating, or deleting database records

- Triggering workflows in other software systems

- Making API calls to external services

- Example: IT service desk agents automatically provisioning user accounts

Communicative Actions:

- Generating natural language responses

- Asking clarifying questions

- Providing recommendations or explanations

- Example: Customer service chatbots resolving support inquiries

Financial Actions:

- Executing stock trades

- Processing payments

- Approving or denying loan applications

- Example: Algorithmic trading systems buying and selling securities

Step 4: Learning (Improving Over Time)

Modern agents don’t just execute preprogrammed responses; they learn and adapt:

Reinforcement Learning:

- Agents receive rewards or penalties for actions

- Learn which actions lead to better outcomes

- Optimize behavior through trial and error

- Example: AlphaGo learning optimal game strategies through millions of self-play games
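The reward-driven trial and error described in the bullets above can be illustrated with tabular Q-learning on a toy problem. This is a sketch under simplifying assumptions, far from what a system like AlphaGo does: the five-cell corridor, the reward of 1.0 at the goal, and the hyperparameters are hypothetical, and the agent explores uniformly at random while learning (Q-learning is off-policy, so it can still learn the greedy policy).

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # move left or right


def step(state, action):
    """Hypothetical deterministic corridor: reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn a Q-table from random exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(2)                 # explore uniformly at random
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy for the non-goal cells: the action with the highest learned value.
greedy_policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(greedy_policy)
```

After training, the greedy policy should move right in every cell, because values closer to the goal are discounted less.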

Supervised Learning:

- Learn from labeled examples

- Improve accuracy in classification or prediction tasks

- Example: Email spam filters learning from user corrections

Self-Reflective Learning:

- Analyzing past decisions and outcomes

- Identifying mistakes and improving future performance

- Building experience-based knowledge

- Example: Autonomous vehicles learning from near-miss incidents

Transfer Learning:

- Applying knowledge from one domain to similar domains

- Generalizing learned patterns to new situations

- Example: Language models applying grammar rules across different topics

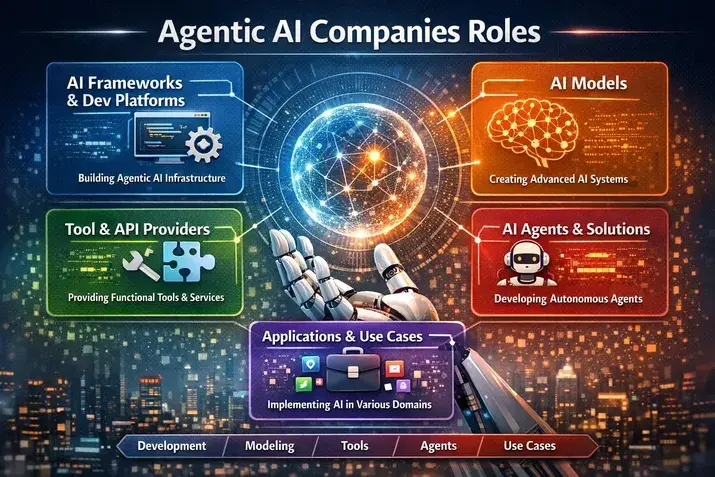

Multi-Agent Systems: When Multiple Agents Share an Environment

The Rise of Collaborative AI

One of the most significant agentic AI trends for 2026 is the shift toward multi-agent systems, in which multiple specialized agents work together.

Gartner reported a staggering 1,445% surge in multi-agent system inquiries from Q1 2024 to Q2 2025, signaling a fundamental shift in how organizations design AI solutions. Rather than deploying single all-purpose agents, companies are creating orchestrated teams of specialized agents, each excelling at specific tasks.

Why Multi-Agent Systems?

Specialization Benefits:

- Each agent focuses on a specific area of expertise

- Better performance than generalist agents

- Easier to develop, test, and maintain individual agents

Scalability:

- Add new capabilities by introducing new agents

- No need to rebuild the entire system

- Distribute computational load across multiple agents

Robustness:

- Failure of one agent doesn’t crash the entire system

- Agents can cover for each other

- Redundancy improves reliability

Flexibility:

- Mix and match agents for different workflows

- Easily reconfigure for changing business needs

- Swap out underperforming agents

Agent Coordination and Communication

When multiple agents share an environment, coordination becomes critical:

Communication Protocols:

- The Agent2Agent (A2A) protocol is emerging as a standard

- Enables agents from different vendors to communicate

- Prevents vendor lock-in

- Facilitates best-of-breed agent ecosystems

Coordination Mechanisms:

- Central orchestrator directing agent activities

- Peer-to-peer negotiation between agents

- Shared memory or knowledge bases

- Task allocation based on agent capabilities
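Capability-based task allocation by a central orchestrator, as listed above, can be sketched as follows. This is a deliberately simple illustration: the `Worker` class, the skill names, and the "least-busy capable worker" rule are all hypothetical choices, and real orchestration platforms use richer scheduling policies.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    """Hypothetical specialized agent with a set of skills and a work queue."""
    name: str
    skills: set
    queue: list = field(default_factory=list)


def allocate(tasks, workers):
    """Central orchestrator: give each task to the least-busy capable worker.

    tasks is a list of (task_name, required_skill) pairs. Tasks that no
    worker can handle are returned so they can be escalated, e.g. to a human.
    """
    unassigned = []
    for task, required_skill in tasks:
        capable = [w for w in workers if required_skill in w.skills]
        if not capable:
            unassigned.append(task)
            continue
        min(capable, key=lambda w: len(w.queue)).queue.append(task)
    return unassigned


monitor = Worker("monitor", {"health_check"})
helpdesk = Worker("helpdesk", {"password_reset", "health_check"})
tasks = [
    ("check db", "health_check"),
    ("check cache", "health_check"),
    ("reset alice", "password_reset"),
    ("negotiate contract", "legal"),
]
escalated = allocate(tasks, [monitor, helpdesk])
print(monitor.queue, helpdesk.queue, escalated)
```

The second health check goes to `helpdesk` because `monitor` is already busy, and the task nobody can handle is returned for escalation.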

Conflict Resolution:

- Managing competing goals between agents

- Prioritization when resources are limited

- Ensuring overall system coherence

Real-World Multi-Agent Examples

Enterprise IT Operations: One agent monitors system health, another handles user requests, a third performs automated remediation, and a fourth escalates complex issues to humans. They coordinate through a central orchestration platform, sharing information about ongoing incidents.

Supply Chain Management: Separate agents manage inventory forecasting, order processing, warehouse operations, shipping logistics, and supplier relationships. They communicate to optimize end-to-end supply chain performance.

Healthcare Coordination: Different agents handle patient scheduling, medical records management, diagnostic support, treatment planning, and billing. They collaborate to provide seamless patient care while maintaining regulatory compliance.

For developers and businesses looking to understand the technical foundations of these systems, GeeksforGeeks offers comprehensive tutorials on AI agents and their architectures, providing practical examples and implementation guidance for building effective multi-agent systems.

Real-World Applications: Agents and Environment in Action

Autonomous Vehicles: Navigating Physical Environments

Self-driving cars represent one of the most complex applications of agent-environment interaction:

Environment Characteristics:

- Highly dynamic (constantly changing traffic, weather, pedestrians)

- Partially observable (limited sensor range, occlusions)

- Stochastic (unpredictable driver and pedestrian behavior)

- Continuous (infinite possible positions, speeds, trajectories)

Agent Capabilities:

- Perception: Camera, lidar, radar, GPS sensing road conditions, obstacles, traffic signs

- Reasoning: Path planning, risk assessment, traffic law compliance

- Action: Steering, acceleration, braking, signaling

- Learning: Improving driving strategies from millions of miles of real and simulated driving

Challenges:

- Safety-critical decisions in milliseconds

- Handling edge cases (construction zones, emergency vehicles, unusual weather)

- Interpreting human gestures and intentions

- Ethical dilemmas in unavoidable accident scenarios

Customer Service Agents: Digital Environments

Customer service represents one of the fastest-growing applications in 2026:

Environment Characteristics:

- Partially observable (limited initial customer context)

- Stochastic (unpredictable customer needs and emotions)

- Dynamic (customer mood evolves during interaction)

- Episodic or sequential (depending on issue complexity)

Agent Capabilities:

- Perception: Natural language understanding of customer messages, sentiment analysis, access to customer history and knowledge bases

- Reasoning: Intent recognition, problem diagnosis, solution selection

- Action: Generating responses, querying databases, triggering backend systems, escalating to humans

- Learning: Improving response quality from customer feedback and successful resolutions

Business Impact: Organizations implementing hyperpersonalized, concierge-style AI agents in customer service have achieved double-digit improvements in every metric related to cost and customer satisfaction. Telus reported that more than 57,000 employees use AI agents, saving 40 minutes per interaction.

Manufacturing Robots: Controlled Physical Environments

Industrial robots showcase agent-environment interaction in relatively controlled settings:

Environment Characteristics:

- Often fully observable (extensive sensor coverage)

- Mostly deterministic (controlled conditions)

- Sequential (production steps build on previous work)

- Dynamic but predictable (assembly line operates at known speeds)

Agent Capabilities:

- Perception: Vision systems for part recognition, force sensors for precise manipulation, quality inspection cameras

- Reasoning: Task sequencing, error detection, quality control

- Action: Precise movements, grasping, assembly, welding, painting

- Learning: Optimizing movement patterns, adapting to part variations, predicting maintenance needs

Evolution to Physical AI: IBM’s Peter Staar predicts 2026 will mark a shift toward AI that can sense, act, and learn in real environments. “Robotics and physical AI are definitely going to pick up,” he noted, as the industry seeks AI systems beyond purely digital environments.

Financial Trading: Information-Rich Digital Environments

Algorithmic trading agents operate in complex financial environments:

Environment Characteristics:

- Partially observable (hidden market information, dark pools)

- Highly stochastic (unpredictable market movements)

- Sequential (trades affect future prices)

- Extremely dynamic (millisecond-level changes)

Agent Capabilities:

- Perception: Real-time price feeds, news sentiment analysis, social media monitoring, economic indicators

- Reasoning: Market prediction models, risk assessment, portfolio optimization

- Action: Buy/sell orders, position adjustments, hedging strategies

- Learning: Adapting trading strategies based on market regime changes, identifying profitable patterns

Governance Challenges: Financial regulators increasingly require explainable AI—agents must show their reasoning for trading decisions, creating transparency challenges for complex learning systems.

Designing Effective Agent-Environment Interactions

Matching Agent Capabilities to Environment Characteristics

Successful agent deployment requires careful alignment between agent design and environmental properties:

For Fully Observable Environments:

- Design agents that leverage complete information

- Focus on optimal decision-making algorithms

- Example: Chess engines that can see the entire board state

For Partially Observable Environments:

- Implement robust information-gathering strategies

- Build uncertainty modeling capabilities

- Create fallback behaviors for ambiguous situations

- Example: Medical diagnostic agents that request additional tests when uncertain
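Uncertainty modeling in a partially observable environment is often done by maintaining a belief (a probability) and updating it with Bayes' rule as evidence arrives. The sketch below applies that to the diagnostic example above. The prior, test sensitivity, false-positive rate, and the 0.85 confidence threshold are hypothetical numbers for illustration, not clinical guidance.

```python
def update_belief(prior, sensitivity, false_positive_rate, test_positive):
    """Bayes' rule for a binary hypothesis after one test result."""
    if test_positive:
        likelihood_true, likelihood_false = sensitivity, false_positive_rate
    else:
        likelihood_true, likelihood_false = 1 - sensitivity, 1 - false_positive_rate
    numerator = likelihood_true * prior
    return numerator / (numerator + likelihood_false * (1 - prior))


def next_step(belief, confident=0.85):
    """Act only when the belief is decisive; otherwise gather more information."""
    if belief >= confident:
        return "treat"
    if belief <= 1 - confident:
        return "rule_out"
    return "order_another_test"


# Hypothetical case: 10% prior, a test with 90% sensitivity and 10% false positives.
belief = update_belief(0.10, 0.90, 0.10, test_positive=True)    # about 0.5
print(next_step(belief))                                         # order_another_test
belief = update_belief(belief, 0.90, 0.10, test_positive=True)  # about 0.9
print(next_step(belief))                                         # treat
```

One positive result only raises the belief to roughly 50%, so the agent asks for more evidence instead of acting; a second positive result pushes it past the threshold.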

For Deterministic Environments:

- Use planning algorithms that assume predictable outcomes

- Optimize for efficiency and precision

- Example: Route planning in static maps

For Stochastic Environments:

- Incorporate probability and risk assessment

- Build adaptive strategies that respond to unexpected outcomes

- Include safety margins and conservative assumptions

- Example: Investment portfolio agents accounting for market volatility

For Dynamic Environments:

- Ensure real-time processing capabilities

- Design for rapid decision-making

- Implement continuous monitoring

- Example: Autonomous drones adjusting flight paths for wind gusts

The Importance of Feedback Loops

Effective agent systems require well-designed feedback mechanisms:

Immediate Feedback:

- Direct consequences of actions (robot drops object → gripper pressure was wrong)

- Enables rapid learning and adjustment

- Critical for safety in physical environments

Delayed Feedback:

- Outcomes manifest over time (customer churn three months after poor service)

- Requires sophisticated attribution methods

- Common in business and healthcare applications

Sparse Feedback:

- Feedback only occasionally available (winning or losing a game)

- Requires intelligent exploration strategies

- Challenging for learning algorithms

Noisy Feedback:

- Measurements contain errors or irrelevant information

- Demands robust filtering and statistical methods

- Typical in sensor-rich environments
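Two of the feedback types above have simple, widely used treatments that are easy to show in code. Discounted returns are one standard way to spread delayed or sparse rewards back over earlier steps, and an exponential moving average is one basic filter for noisy feedback. The function names and the example numbers below are hypothetical.

```python
def discounted_returns(rewards, gamma=0.9):
    """Credit each step with its own reward plus discounted future rewards."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def smooth(readings, alpha=0.5):
    """Exponential moving average over a non-empty list of noisy readings."""
    smoothed, estimate = [], readings[0]
    for r in readings:
        estimate = alpha * r + (1 - alpha) * estimate
        smoothed.append(estimate)
    return smoothed


# Sparse, delayed reward: only the last step pays off.
print(discounted_returns([0.0, 0.0, 1.0], gamma=0.5))  # [0.25, 0.5, 1.0]
# Noisy readings: the spike at 20 is damped.
print(smooth([10, 20, 10], alpha=0.5))                  # [10.0, 15.0, 12.5]
```

A smaller `gamma` makes the agent more short-sighted, and a smaller `alpha` makes the filter smoother but slower to react.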

Handling Environment Complexity

As environments become more complex, agent architectures must adapt:

Hierarchical Reasoning:

- Break complex goals into sub-goals

- Different reasoning levels for strategic, tactical, operational decisions

- Example: Delivery robot with route planning (strategic), obstacle avoidance (tactical), motor control (operational) layers

Modular Perception:

- Separate perception modules for different sensory modes

- Integration layer combines information sources

- Example: Autonomous vehicles fusing camera, lidar, radar, GPS data

Specialized Sub-Agents:

- Deploy multiple agents within a single system

- Each handles a specific aspect of the environment

- Orchestration layer coordinates overall behavior

- Example: Smart home with separate agents for climate, security, entertainment, energy management

Challenges and Limitations in Agent-Environment Interactions

The Reality of Imperfect Perception

No agent perceives its environment perfectly. Understanding these limitations is crucial when deploying agents:

Sensor Limitations:

- Cameras affected by lighting conditions, occlusions, resolution

- Microphones capture background noise, miss distant sounds

- Network sensors have latency, occasional packet loss

- GPS unreliable in urban canyons, indoors

Interpretation Errors:

- Computer vision can misclassify objects (especially in unusual conditions)

- Natural language processing may misunderstand ambiguous phrasing

- Anomaly detection generates false positives and negatives

Computational Constraints:

- Processing all available sensor data may be too slow

- Trade-offs between accuracy and speed

- Energy limitations in mobile or embedded agents

Mitigation Strategies:

- Multi-sensor fusion (combining multiple information sources)

- Uncertainty quantification (explicitly modeling confidence levels)

- Human oversight for high-stakes decisions

- Graceful degradation when sensors fail
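Multi-sensor fusion and uncertainty quantification can be combined in one small formula: weight each sensor's estimate by the inverse of its variance. The sketch below assumes the sensors are unbiased and their errors are independent, which real sensors only approximate. The `fuse` function and the example readings are hypothetical.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent (value, variance) estimates.

    Returns the fused value and its variance. More certain sensors
    (smaller variance) get proportionally more weight.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical distance readings in meters: a precise lidar and a noisier radar.
lidar = (10.0, 1.0)
radar = (12.0, 4.0)
value, variance = fuse([lidar, radar])
print(round(value, 2), round(variance, 2))  # 10.4 0.8
```

The fused variance (0.8) is lower than either sensor's alone, which is the quantitative reason combining sources helps.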

The Challenge of Unpredictable Environments

Real-world environments rarely behave exactly as anticipated:

Edge Cases:

- Situations not represented in training data

- Novel combinations of familiar elements

- Extreme or unusual conditions

- Example: Autonomous vehicle encountering a moose on a highway for the first time

Adversarial Behavior:

- Malicious actors deliberately trying to fool agents

- Example: Attackers placing stickers on stop signs to confuse self-driving cars

Concept Drift:

- Environment characteristics change over time

- Agent’s learned models become outdated

- Example: Customer behavior shifting after a major economic event

Addressing Unpredictability:

- Continuous learning and model updating

- Anomaly detection and conservative responses to novelty

- Human-in-the-loop for unusual situations

- Extensive testing in diverse scenarios

- Simulation for rare but critical events
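Detecting concept drift can start with something as simple as comparing a recent window of observations against a baseline. The sketch below is a basic heuristic, not a full statistical drift test: the `DriftMonitor` class, the window size, and the tolerance are hypothetical, and production systems typically use more rigorous detectors.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Hypothetical check: flag drift when the recent mean leaves a baseline band."""

    def __init__(self, baseline_mean, tolerance, window=5):
        self.baseline_mean = baseline_mean
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)   # keeps only the newest values

    def observe(self, value):
        """Record a value; return True once a full window has drifted."""
        self.recent.append(value)
        if len(self.recent) < self.recent.maxlen:
            return False                     # not enough data to judge yet
        return abs(mean(self.recent) - self.baseline_mean) > self.tolerance


# Hypothetical daily order values: stable around 100, then shifting upward.
monitor = DriftMonitor(baseline_mean=100.0, tolerance=10.0, window=3)
flags = [monitor.observe(v) for v in [100, 102, 98, 130, 135, 140]]
print(flags)  # [False, False, False, False, True, True]
```

A flag like this would typically trigger one of the responses listed above, such as a model update or a human review.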

Scalability and Performance Issues

As agent systems scale, new challenges emerge:

Computational Demands:

- Complex reasoning requires significant processing power

- Real-time requirements limit deliberation time

- Energy consumption especially critical for mobile agents

Coordination Overhead:

- Multi-agent systems need communication infrastructure

- More agents mean more potential conflicts to resolve

- Orchestration complexity grows super-linearly

Data Management:

- Agents generate vast amounts of state and action logs

- Storage and retrieval of experience for learning

- Privacy and security of sensitive environmental data

Solutions:

- Cloud-edge computing architectures (heavy processing in cloud, time-critical decisions at edge)

- Hierarchical agent organizations (reduce peer-to-peer communication needs)

- Efficient data structures and algorithms

- Selective memory (forgetting irrelevant experiences)

The Future of Agents and Environment in AI: 2026 and Beyond

Emerging Trends Reshaping Agent-Environment Interactions

According to comprehensive industry analysis from IBM Think’s AI trends report, several key developments are transforming how agents interact with environments:

Physical AI and Robotics: As IBM’s Peter Staar notes, “Robotics and physical AI are definitely going to pick up” in 2026. While large language models remain dominant, the industry is shifting toward AI that can sense, act, and learn in real physical environments rather than purely digital ones. This represents the next frontier for innovation beyond scaling existing models.

World Models: AI systems are developing sophisticated internal models of how environments work, enabling better prediction and planning. These “world models” allow agents to simulate potential actions mentally before executing them physically, dramatically improving decision quality and safety.
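The core idea of simulating actions before executing them can be shown with a one-step lookahead. This is a toy sketch, since real world models are learned and far richer: the `choose_by_simulation` helper, the one-dimensional world, and the scoring rule are hypothetical.

```python
def choose_by_simulation(state, actions, model, score):
    """Try each action inside the model and keep the one with the best predicted result."""
    return max(actions, key=lambda a: score(model(state, a)))


# Hypothetical 1-D world: the state is a position and the goal is position 7.
def model(position, move):
    return position + move          # the agent's internal prediction of the next state


def score(position):
    return -abs(7 - position)       # closer to the goal is better


best_move = choose_by_simulation(3, [-1, 0, 1], model, score)
print(best_move)  # 1
```

Nothing in the real environment changes while the agent evaluates the three candidate moves; only the chosen one would be executed.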

Embodied Intelligence: Rather than treating perception and action as separate components, embodied AI integrates sensing, reasoning, and movement into unified systems that learn through physical interaction with environments. As Maryna Bautina of SoftServe observes, “AI will move off the screen and into machines that learn through motion rather than code.”

Agent Sovereignty and Trust: For 93% of executives surveyed, factoring AI sovereignty into business strategy is essential in 2026. As agents become more autonomous, organizations must design systems that can explain their decisions even for complex outputs, building transparency and trust into agent architectures.

Democratization of Agent Creation

Kevin Chung of Writer highlights that “the ability to design and deploy intelligent agents is moving beyond developers into the hands of everyday business users.” This democratization will enable:

No-Code Agent Builders:

- Visual interfaces for defining agent goals and behaviors

- Pre-built agent templates for common use cases

- Integration wizards connecting agents to business systems

Domain-Specific Agent Platforms:

- Industry-specific agent frameworks (healthcare, finance, manufacturing)

- Compliance and governance built into platforms

- Best practices codified for particular environments

Collaborative Agent Design:

- Teams of business users, developers, and domain experts co-creating agents

- Rapid prototyping and testing

- Iterative improvement based on real-world performance

Enhanced Environment Understanding

Future agent systems will demonstrate dramatically improved environmental comprehension:

Contextual Awareness:

- Understanding not just current state but historical context

- Recognizing social and cultural nuances in human environments

- Adapting behavior to subtle environmental cues

Multi-Modal Sensing:

- Seamlessly integrating visual, auditory, textual, and sensor data

- Cross-modal reasoning (using sound to compensate for visual occlusions)

- Richer, more complete environmental models

Anticipatory Perception:

- Predicting environment changes before they occur

- Proactive positioning for optimal sensing

- Resource-efficient selective attention

Ethical and Societal Considerations

As agents become more autonomous and influential in their environments, ethical questions intensify:

Responsibility and Accountability:

- When an agent makes a harmful decision, who is responsible?

- How do we assign liability in complex multi-agent systems?

- What level of human oversight is appropriate for different risk levels?

Transparency vs. Performance:

- More interpretable agents may perform worse than black-box systems

- Finding the right balance for different applications

- Regulatory requirements for explainability

Job Displacement and Augmentation:

- Which tasks should be fully automated vs. human-AI collaboration?

- How do we ensure agents complement rather than replace workers?

- Training and transition support for affected employees

Environmental Impact:

- Energy consumption of large-scale agent deployments

- Physical resource usage by robotic agents

- Sustainability considerations in agent design

Frequently Asked Questions About Agents and Environment in AI

What is the difference between an AI agent and a regular software program?

Regular software programs follow predetermined logic—they execute the exact steps programmers coded. AI agents, in contrast, perceive their environment, make decisions based on goals and current conditions, and take actions that may not be explicitly programmed. Agents learn and adapt over time, while traditional programs remain static unless manually updated. The key difference is autonomy: agents can handle novel situations by reasoning about them, whereas programs can only execute predefined instructions.

How do AI agents learn about their environment?

AI agents learn through several mechanisms. Reinforcement learning allows agents to receive rewards or penalties based on action outcomes, gradually learning which behaviors succeed in their environment. Supervised learning uses labeled examples to teach pattern recognition. Self-reflective learning involves agents analyzing their past decisions to identify mistakes and improvements. Transfer learning lets agents apply knowledge from one environment to similar environments. Modern agentic systems often combine these approaches, continuously updating their understanding as they interact with environments.

Can AI agents work in environments they weren’t trained for?

This depends on how different the new environment is and how the agent was designed. Agents with strong transfer learning capabilities can often adapt to similar environments with some differences. However, dramatically different environments typically require retraining or significant adaptation. Emerging techniques like few-shot learning and meta-learning aim to create agents that generalize better to novel environments. The most robust approach currently involves human oversight when deploying agents in substantially new environments, with gradual autonomy increases as performance is validated.

What happens when multiple AI agents share the same environment?

When multiple agents share an environment, they must coordinate to avoid conflicts and achieve collective goals. This can happen through central orchestration (a master system directing all agents), peer-to-peer negotiation (agents communicating directly), or emergent coordination (agents adapting to each other’s behavior). Challenges include managing competing objectives, efficient resource allocation, and avoiding deadlocks. Well-designed multi-agent systems can solve problems too complex for any single agent, but require careful architecture and communication protocols.

How do you ensure AI agents make safe decisions in critical environments?

Safety in critical environments requires multiple layers of protection. Formal verification mathematically proves agents will behave within specified bounds. Human-in-the-loop systems require human approval for high-risk decisions. Redundancy and fail-safes provide backup systems when primary agents fail. Extensive simulation testing validates agent behavior in edge cases before real-world deployment. Continuous monitoring detects anomalous behavior requiring intervention. Constrained action spaces limit what agents can do in sensitive areas. The specific approach depends on the application’s risk profile and acceptable failure rates.
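Two of the layers mentioned above, constrained action spaces and human-in-the-loop approval, can be sketched as a small gate placed in front of every action. The action names and the `guard` function are hypothetical, and a real deployment would add logging, authentication, and audit trails.

```python
ALLOWED_ACTIONS = {"read_record", "send_reminder", "issue_refund"}   # hypothetical
NEEDS_APPROVAL = {"issue_refund"}                                     # hypothetical


def guard(action, approved_by_human=False):
    """Return 'execute', 'hold_for_approval', or 'reject' for a proposed action."""
    if action not in ALLOWED_ACTIONS:
        return "reject"               # constrained action space
    if action in NEEDS_APPROVAL and not approved_by_human:
        return "hold_for_approval"    # human-in-the-loop for high-risk steps
    return "execute"


print(guard("send_reminder"))                         # execute
print(guard("issue_refund"))                          # hold_for_approval
print(guard("issue_refund", approved_by_human=True))  # execute
print(guard("delete_account"))                        # reject
```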

What are the biggest challenges in deploying AI agents in real-world environments?

The primary challenges include handling unpredictable situations not encountered during training, achieving reliable performance across diverse environmental conditions, scaling from controlled pilots to messy production environments, ensuring safety and managing failure modes, integrating with legacy systems and established workflows, maintaining agent performance as environments drift over time, balancing autonomy with appropriate human oversight, and addressing ethical concerns and potential societal impacts. According to industry research, while nearly two-thirds of organizations experiment with AI agents, fewer than one in four successfully scale them to production, largely due to these deployment challenges.

Conclusion: Mastering the Agent-Environment Relationship

Understanding agents and environment in AI is no longer optional for anyone involved in artificial intelligence; it is fundamental to deploying systems that actually work in the real world. The relationship between an agent and its environment determines what the agent can perceive, what actions it can take, how it learns, and ultimately whether it succeeds or fails at its assigned tasks. As we’ve explored throughout this guide, environments vary dramatically in their characteristics (from fully observable to partially hidden, from predictable to chaotic, from static to rapidly changing), and agent architectures must match these environmental realities. The most successful AI implementations in 2026 aren’t those with the most sophisticated algorithms or largest models; they’re those that thoughtfully align agent capabilities with environmental demands.

The rapid growth in agentic AI, with the market projected to surge from $7.8 billion to $52 billion by 2030, reflects growing organizational confidence in deploying autonomous systems. However, successful deployment depends critically on understanding how agents and environment in AI interact. As we move toward multi-agent systems where specialized agents collaborate in shared environments, the complexity multiplies, but so do the capabilities. From autonomous vehicles navigating physical streets to customer service agents managing digital interactions, from manufacturing robots in controlled factories to financial traders in volatile markets, the future belongs to systems that can perceive accurately, reason intelligently, act effectively, and learn continuously. By mastering these fundamental concepts, developers can build more robust agents, business leaders can deploy AI more strategically, and organizations can realize the transformative potential of autonomous intelligent systems while managing risks appropriately.

External Resources and Authoritative Citations

For readers seeking deeper technical knowledge and industry insights on agents and environment in AI:

- IBM AI Agents: Complete 2026 Guide – Comprehensive resource from IBM covering agent architecture, components, frameworks, governance, and enterprise implementation strategies

- TileDB: What is Agentic AI – Complete Guide – Technical deep-dive into agentic AI architecture, perception-reasoning-action cycles, and platform implementations

Schema Markup (JSON-LD):

{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "Agents and Environment in AI: Understanding the Foundation of Autonomous Intelligent Systems",
  "description": "Comprehensive guide to how agents and environment in AI work together, covering perception, reasoning, action cycles, multi-agent systems, and real-world applications in 2026.",
  "image": "https://rankrise1.com/images/agents-environment-ai-2026.jpg",
  "author": {
    "@type": "Organization",
    "name": "RankRise1",
    "url": "https://rankrise1.com"
  },
  "publisher": {
    "@type": "Organization",
    "name": "RankRise1",
    "logo": {
      "@type": "ImageObject",
      "url": "https://rankrise1.com/logo.png"
    }
  },
  "datePublished": "2026-01-09",
  "dateModified": "2026-01-09",
  "mainEntityOfPage": {
    "@type": "WebPage",
    "@id": "https://rankrise1.com/agents-environment-ai"
  },
  "about": {
    "@type": "Thing",
    "name": "AI Agents and Environments",
    "description": "The fundamental relationship between artificial intelligence agents and the environments they perceive, reason about, and act within"
  },
  "keywords": "agents and environment in AI, AI agents, autonomous systems, multi-agent systems, perception reasoning action, agentic AI, intelligent agents, reinforcement learning"
}

{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {
      "@type": "Question",
      "name": "What is the difference between an AI agent and a regular software program?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "Regular software programs follow predetermined logic and execute exact steps programmers coded. AI agents perceive their environment, make decisions based on goals and current conditions, and take actions that may not be explicitly programmed. Agents learn and adapt over time, while traditional programs remain static unless manually updated."
      }
    },
    {
      "@type": "Question",
      "name": "How do AI agents learn about their environment?",
      "acceptedAnswer": {
        "@type": "Answer",
        "text": "AI agents learn through reinforcement learning (receiving rewards/penalties for actions), supervised learning (labeled examples), self-reflective learning (analyzing past decisions), and transfer learning (applying knowledge across environments). Modern agentic systems combine these approaches, continuously updating